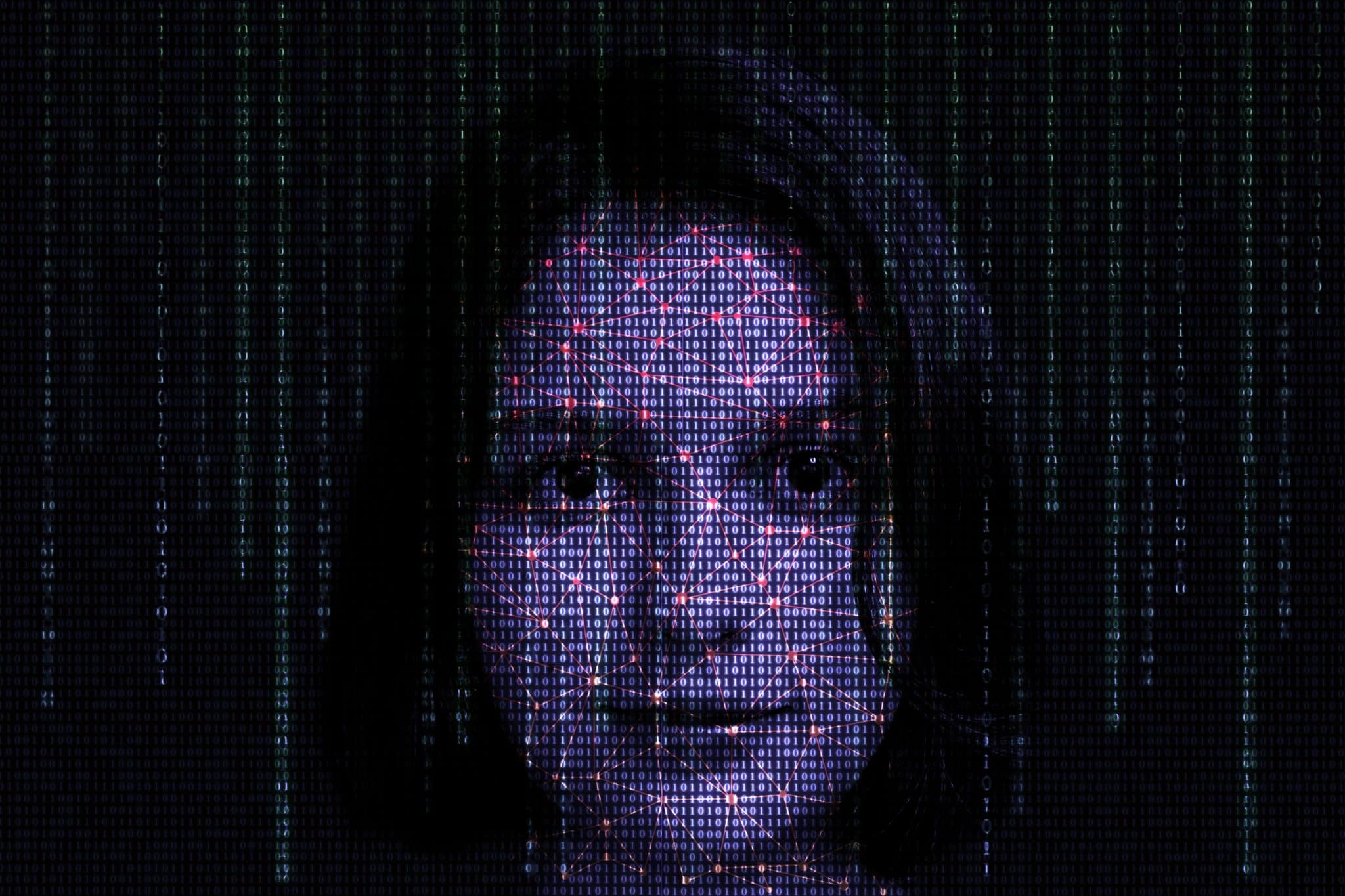

On Friday, leading tech companies including Meta, Microsoft, and TikTok pledged to take action against AI-generated content aimed at deceiving voters ahead of critical elections worldwide this year.

The agreement commits these companies, along with Google and OpenAI, to develop methods for identifying, labeling, and countering AI-generated images, videos, and audio that seek to mislead voters.

Nick Clegg, Meta’s president of global affairs, stressed that combating deceptive content requires everyone involved to play a part, from the companies that build content-creation tools to the platforms where users consume the results. The pledge, unveiled at the Munich Security Conference in Germany, was signed by 20 companies, including X (formerly Twitter), Snap, Adobe, LinkedIn, Amazon, and IBM.

The accord proposes various measures, such as watermarking or tagging AI-generated content at its source, while acknowledging that such solutions have limitations. The signatories also committed to collaborating on detecting and addressing deceptive election material on their platforms, which may include labeling content to indicate that it was generated by AI.

Meta, Google, and OpenAI have already agreed to adopt a common watermarking standard for images generated by their AI applications, like OpenAI’s ChatGPT, Microsoft’s Copilot, or Google’s Gemini (formerly Bard).

The initiative comes amid growing concern that AI-powered tools could be exploited during pivotal election periods. European Commission Vice President Vera Jourova, who attended the Munich event, welcomed the tech companies’ acknowledgment of the risks AI poses to democracy. However, she stressed that governments must also take responsibility, particularly as the EU prepares for European Parliament elections in June.

Recent incidents, including a convincing AI deepfake robocall impersonating US President Joe Biden and the use of AI-generated speeches by the party of Pakistan’s jailed former prime minister Imran Khan, underscore the urgency of addressing the misuse of AI in electoral contexts.
